Windows Server 2008 R2 Remote Desktop Services (RDS) single sign-on lets users log in to RD Web Access and launch applications without having to enter their credentials twice. Single sign-on is useful, but it does not work out of the box; a few configuration steps are required. These steps are documented in the following blog post:

http://blogs.msdn.com/b/rds/archive/2009/08/11/introducing-web-single-sign-on-for-remoteapp-and-desktop-connections.aspx

What is not documented, however, is that for single sign-on to work by default, users must log in with:

DOMAIN\Username

For example:

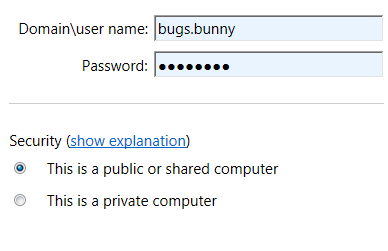

If a user logs in with just the user name, such as bugs.bunny (as shown in the screenshot below), and then attempts to launch a remote application from RD Web Access, the user receives the error below.

Error experienced:

Your computer can't connect to the remote computer because an error occurred on the remote computer that you want to connect to. Contact your network administrator for assistance.

Note: This error message is generic and is presented for a wide range of problems relating to RDS.
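To make the distinction concrete, here is a small, hypothetical Python sketch of the rule described above: a logon name of the form DOMAIN\Username works with single sign-on by default, while a bare user name such as bugs.bunny does not. The helper names `has_domain_prefix` and `qualify` and the domain `CONTOSO` are illustrative assumptions, not part of RD Web Access; `qualify` only approximates what pre-filling the domain on the login page achieves.

```python
"""Illustrative check: does a logon name carry the DOMAIN\\ prefix?

Hypothetical helpers, not part of RD Web Access. They only mirror the rule
described above: DOMAIN\\Username works with single sign-on by default,
a bare user name such as bugs.bunny does not.
"""


def has_domain_prefix(logon_name: str) -> bool:
    """True for names of the form DOMAIN\\Username with both parts non-empty."""
    domain, sep, user = logon_name.partition("\\")
    return bool(sep) and bool(domain) and bool(user) and "\\" not in user


def qualify(logon_name: str, default_domain: str) -> str:
    """Prefix a bare user name with default_domain; leave qualified names alone."""
    if has_domain_prefix(logon_name):
        return logon_name
    return f"{default_domain}\\{logon_name}"
```

For example, `has_domain_prefix("bugs.bunny")` is false, while `qualify("bugs.bunny", "CONTOSO")` yields `CONTOSO\bugs.bunny`.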

For this Active Directory, we want users to log in by entering only their user name; we do not want them to have to specify the domain name. To do this, perform the following procedure:

1. Log in to the server running the Remote Desktop Web Access role service with local or domain administrative permissions.

2. Navigate to the following location, where the last folder is the language of your location (for example, `en-US` on an English installation):

   `%windir%\Web\RDWeb\Pages\<language of your location>\`

3. Back up the login.aspx file to another location.

4. Right-click the login.aspx file and select Edit. The file opens for editing in your default HTML editor.

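5. Change the original code section:

   ```html
   <input id="DomainUserName" name="DomainUserName" type="text" class="textInputField" runat="server" size="25" autocomplete="off" />
   ```

   to:

   ```html
   <input id="DomainUserName" name="DomainUserName" type="text" class="textInputField" runat="server" size="25" autocomplete="off" value="domainname\" />
   ```

   Replace domainname with your own domain name. The user name box on the login page is then pre-filled with domainname\, so users only need to type their user name after it.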
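If you need to repeat steps 3 to 5 on several RD Web Access servers, they can be scripted. The following is a minimal Python sketch under stated assumptions, not a tested deployment tool: it assumes Python 3 is installed on the server, that login.aspx is UTF-8 text containing exactly one `DomainUserName` input tag as shipped, and that `CONTOSO` stands in for your domain name. The function names `prefill_domain` and `patch_login_page` are hypothetical.

```python
"""Sketch: back up login.aspx (step 3) and pre-fill the domain (step 5).

Assumptions (not from the original post): Python 3 on the RD Web Access
server, UTF-8 login.aspx, and a hypothetical domain name such as CONTOSO.
"""
import re
import shutil
from pathlib import Path

# Matches the self-closing <input ... /> tag whose id is DomainUserName.
INPUT_TAG = re.compile(r'<input\b[^>]*\bid="DomainUserName"[^>]*?\s*/>', re.IGNORECASE)


def prefill_domain(markup: str, domain: str) -> str:
    """Return markup with value="DOMAIN\\" added to the DomainUserName input."""

    def add_value(match: re.Match) -> str:
        tag = match.group(0)
        if re.search(r'\bvalue=', tag, re.IGNORECASE):
            return tag  # already customised; leave it untouched
        # Drop the trailing "/>" and re-append it after the new attribute.
        return tag[:-2].rstrip() + f' value="{domain}\\" />'

    new_markup, count = INPUT_TAG.subn(add_value, markup)
    if count != 1:
        raise ValueError(f"expected one DomainUserName input, found {count}")
    return new_markup


def patch_login_page(path: Path, domain: str, backup_dir: Path) -> Path:
    """Copy login.aspx into backup_dir, then rewrite the original in place."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup = backup_dir / path.name
    shutil.copy2(path, backup)  # step 3: keep an untouched copy
    text = path.read_text(encoding="utf-8")
    path.write_text(prefill_domain(text, domain), encoding="utf-8")  # step 5
    return backup
```

For example, on an English installation you might call `patch_login_page(Path(r"C:\Windows\Web\RDWeb\Pages\en-US\login.aspx"), "CONTOSO", Path(r"C:\Backup\RDWeb"))` from an elevated prompt. The function refuses to write if it does not find exactly one matching tag, and it skips the change if a `value` attribute is already present, so running it twice is harmless.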